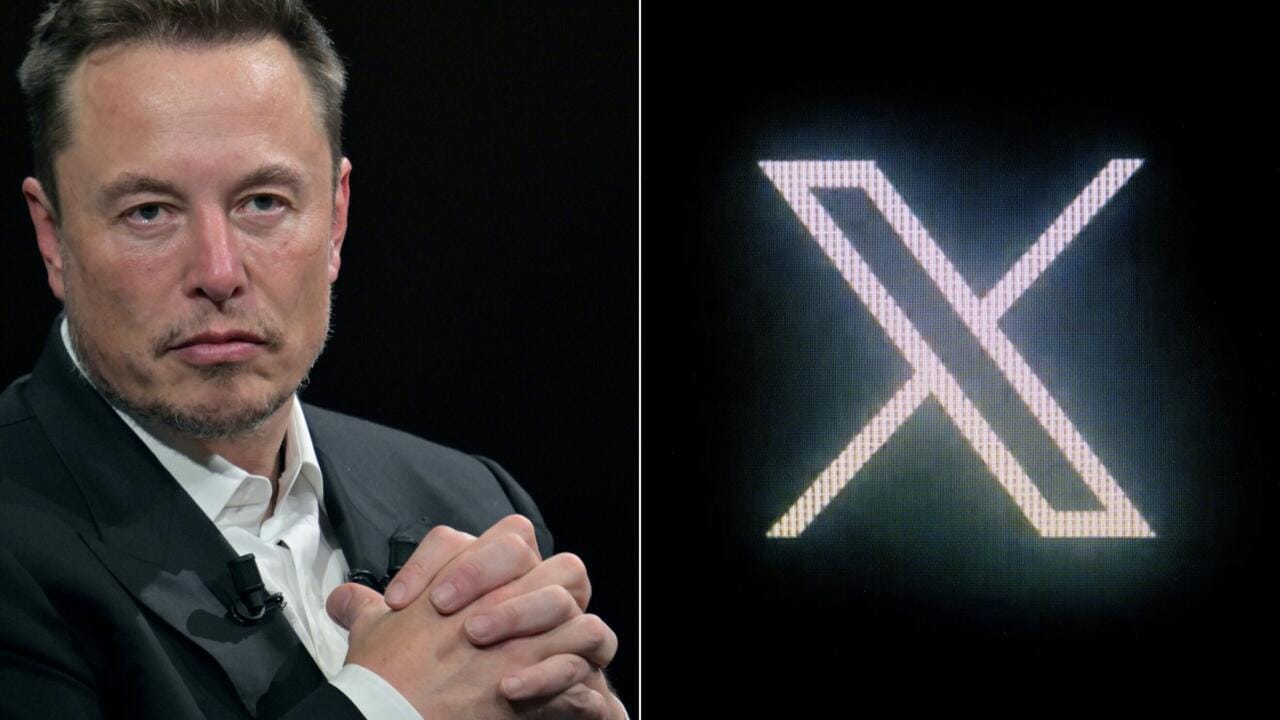

In the era of social media, where information flows freely and rapidly, the integrity of elections faces a new threat: the amplification of conspiracy theories by AI-powered algorithms. Elon Musk’s X, once a platform for real-time news and open dialogue, is now under scrutiny for its role in fueling election misinformation through its trending topics feature. This article examines how X’s algorithmic design, coupled with relaxed content moderation, creates a breeding ground for false narratives that can undermine democratic processes.

Since acquiring Twitter and rebranding it as X, Elon Musk has implemented significant changes, including a shift towards AI-driven trending topics. While intended to highlight popular discussions, these algorithms have inadvertently provided a powerful platform for conspiracy theories to flourish. The lack of adequate human oversight and the sheer volume of information processed by these algorithms make it challenging to filter out misleading content effectively.

The Algorithmic Amplifier

Imagine a megaphone boosting the loudest voices in a crowded room, regardless of whether they’re speaking truth or falsehood. This is essentially how X’s trending topics operate. The algorithm identifies topics gaining traction based on factors like engagement, hashtags, and user interactions. However, this system is vulnerable to manipulation, as coordinated groups or even bots can artificially inflate the visibility of misleading narratives.

- Trending ≠ True: A topic that is trending is not necessarily accurate. X’s algorithm prioritizes engagement, and sensationalist or controversial content tends to attract engagement regardless of its truthfulness.

- Echo Chambers: The algorithm often reinforces existing biases by showing users content similar to what they’ve previously engaged with. This creates echo chambers where conspiracy theories can circulate unchallenged, solidifying users’ beliefs in false narratives.
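To make the megaphone analogy concrete, here is a minimal sketch of an engagement-only trending ranker. It is not X’s actual algorithm, which is not described here; the `Post` fields, the scoring weights, and the sample hashtags and account names are all hypothetical and chosen only for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    topic: str
    author: str
    likes: int
    reposts: int
    replies: int


def engagement_score(post: Post) -> int:
    # Hypothetical weights: reposts and replies count more than likes.
    return post.likes + 2 * post.reposts + 3 * post.replies


def trending(posts, top_n=3):
    """Rank topics purely by volume and engagement, ignoring accuracy."""
    totals = Counter()
    for post in posts:
        # Each post adds 1 for volume plus its engagement score.
        totals[post.topic] += 1 + engagement_score(post)
    return [topic for topic, _ in totals.most_common(top_n)]


# Organic activity from a handful of real accounts (made-up numbers).
organic = [
    Post("#ElectionResults", "reporter_a", likes=120, reposts=40, replies=15),
    Post("#ElectionResults", "reporter_b", likes=90, reposts=25, replies=10),
    Post("#LocalWeather", "forecaster", likes=30, reposts=5, replies=2),
]

# A coordinated network: 50 accounts posting the same hashtag and
# boosting one another with a few likes and reposts each.
bots = [
    Post("#StolenVote", f"bot_{i}", likes=10, reposts=10, replies=0)
    for i in range(50)
]

print(trending(organic))         # organic ranking
print(trending(organic + bots))  # ranking after coordinated inflation
```

In this toy setup the coordinated hashtag overtakes the organic topics even though each individual bot post has little engagement, because the ranker has no notion of who is posting or whether the claim is true.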

The Content Moderation Vacuum

Adding fuel to the fire is the reduction in content moderation on X. While combating misinformation requires a delicate balance between free speech and platform responsibility, critics argue that Musk’s approach has tilted too far towards the former. Fewer human moderators and a greater reliance on automated systems mean that false and harmful content can slip through the cracks and spread widely before being flagged.

- The Wild West of Information: With fewer checks and balances, X has become a fertile ground for those seeking to spread misinformation, including politically motivated actors aiming to influence elections.

- Real-World Consequences: Unchecked conspiracy theories can erode trust in democratic institutions, incite violence, and even influence voting behavior.

My Own Experience

As an avid user of X (formerly Twitter), I’ve personally witnessed the rise of election-related conspiracy theories in my feed. What’s concerning is how quickly these narratives can spread and gain traction, often overshadowing factual reporting. It’s a constant reminder of the need for critical thinking and media literacy in navigating the digital landscape.

Statistics Paint a Grim Picture

- A 2023 study by the Center for Democracy & Technology found that engagement with election misinformation on X increased by 40% after Musk’s takeover.

- Research from the Anti-Defamation League revealed that hate speech and harassment on the platform surged by over 500% in the months following the acquisition.

These numbers underscore the urgent need for X to address the issue of algorithmic amplification of conspiracy theories. While the platform has taken some steps, such as partnering with fact-checking organizations, more robust measures are needed to safeguard the integrity of information, particularly during election cycles.

The Road Ahead: Striking a Balance

The challenge for X lies in finding a balance between promoting free expression and mitigating the spread of harmful misinformation. This requires a multi-faceted approach:

- Refining Algorithms: The platform needs to refine its algorithms to prioritize credible sources and de-emphasize engagement driven by sensationalism and falsehoods.

- Strengthening Content Moderation: Investing in human moderators and developing more sophisticated AI tools for content analysis are crucial steps in combating misinformation.

- Empowering Users: X should provide users with tools to identify and report misinformation, promoting media literacy and critical thinking skills.
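As a rough illustration of the first point, the sketch below weights each post by a source-credibility score and dampens raw engagement with a logarithm. This is one possible approach under stated assumptions, not a description of anything X does: the `credibility` map, the `default_credibility` value, and the sample accounts and numbers are hypothetical.

```python
import math
from collections import defaultdict


def adjusted_trending(posts, credibility, default_credibility=0.02, top_n=3):
    """Rank topics by credibility-weighted, log-dampened engagement.

    posts: iterable of (topic, author, engagement) tuples.
    credibility: hypothetical map of author -> score between 0 and 1.
    Authors missing from the map get default_credibility.
    """
    totals = defaultdict(float)
    for topic, author, engagement in posts:
        weight = credibility.get(author, default_credibility)
        # log1p dampens engagement so a viral spike counts for less
        # than it would under a linear score.
        totals[topic] += weight * math.log1p(engagement)
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [topic for topic, _ in ranked[:top_n]]


# Made-up data: two established reporters and 50 unknown accounts.
posts = [
    ("#ElectionResults", "reporter_a", 245),
    ("#ElectionResults", "reporter_b", 170),
] + [("#StolenVote", f"bot_{i}", 30) for i in range(50)]

credibility = {"reporter_a": 0.9, "reporter_b": 0.8}

print(adjusted_trending(posts, credibility))
```

With these made-up numbers the reporters’ topic ranks first. The design has obvious trade-offs: a large enough network of low-credibility accounts could still win, and deciding who receives a high credibility score is itself a moderation judgment that needs human oversight.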

The responsibility for combating election misinformation doesn’t rest solely on X’s shoulders. Users, journalists, and policymakers all have a role to play in fostering a healthy information ecosystem. However, as a platform with immense reach and influence, X has a moral obligation to address the issue of algorithmic amplification and ensure that its trending topics reflect factual discourse, not dangerous conspiracy theories.